
AI Annotation Generator

Free AI online tool to create annotations — no sign-up, perfect for students, researchers, and educators.

This page covers the AIFreeBox AI Annotation Generator, an online tool that creates clear, structured, and context-aware annotation drafts across different scenarios, from research notes to code comments and compliance reviews.

On this page, you’ll find an overview of what the tool can do, where it applies best, how to use it effectively, tips for better results, its known limitations with practical fixes, and a full FAQ section. Available on free and ultra plans.

What Can AIFreeBox AI Annotation Generator Do?

AIFreeBox AI Annotation Generator is built on transformer-based large language models, fine-tuned to handle annotation tasks with context awareness and precision. Unlike simple highlight or comment tools, it is a multi-scene annotation assistant — mode-driven, structured, and designed for human–AI collaboration.

Its core value lies in producing annotations that are clear, traceable, and professionally useful across domains such as research, code documentation, compliance, and content optimization. Users choose from 12 distinct annotation modes, while the system adapts style and structure to the task.

With support for 33 languages, it offers reliable annotations that balance automation with user oversight — giving you structured outputs you can refine, review, and apply in real workflows.

AIFreeBox Annotation Generator vs. Simple Annotation Tools

| Core Value | AIFreeBox AI Annotation Generator | Simple Annotation Tools |
|---|---|---|
| Meeting User Needs | Serves researchers, developers, creators, and enterprises: citations, code docs, metadata, compliance notes. | General notes/highlights only; no domain-specific support. |
| Structured Output | Each annotation = Quote + Note + Warning. Supports bullet or JSON for reuse and parsing. | Free-form comments; inconsistent and hard to repurpose. |
| Professional & Compliance Ready | Built-in ⚠️ Human review for sensitive fields; strict “no fabrication”. | No compliance awareness; subjective, incomplete remarks. |
| Cross-Language & Multi-Scene | 30+ languages; 12 annotation modes; Domain Packs for easy extension. | Usually single language; no styles or modes. |
| Efficiency & Consistency | Cuts repetitive work; consistent outputs for team workflows. | Manual entry; quality varies by individual. |

Recommended Use Cases and Pain Points Solved

| Audience | Use Case | Pain Point Solved |
|---|---|---|
| Researchers | Summarize articles, highlight evidence, build glossaries | Manual annotations are slow and often inconsistent |
| Developers | Add code comments, generate API notes, document functions | Documentation gaps cause confusion and onboarding delays |
| Writers & Editors | Produce structured summaries, highlight themes, tag content | Hard to keep notes consistent across multiple drafts |
| Organizations | Review policies, annotate reports, highlight obligations | Complex documents are hard to track and interpret quickly |
| Educators | Prepare lecture notes, mark learning materials, build glossaries | Notes scattered across sources, lacking a unified format |
| Students | Highlight study texts, extract key points, define terms | Time-consuming to prepare revision notes effectively |
| Reviewers | Analyze feedback, classify comments, group by topic | Large volumes of feedback are difficult to process manually |
| Data Teams | Tag datasets, label entities, organize text for training | Manual labeling is costly and prone to inconsistency |

How to Do Annotations With AIFreeBox AI: Step-by-Step Guide

Step 1 — Provide Your Content

Paste the text or code you want annotated. Include enough context so the system can detect key elements and objectives.

Step 2 — Choose Annotation Mode

Select the one annotation mode that matches your task. The 12 options include Key Points, Inline Explanations, Definitions & Glossary, Evidence & Citations, Action Items, Code Comments, Data Labeling (ML), and Aspect & Sentiment.

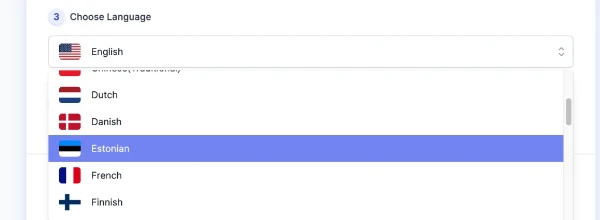

Step 3 — Choose Language

Pick the target output language. The tool supports over 30 languages, making it suitable for global use.

Step 4 — Adjust Creativity Level

Use the slider to balance precision and variation. A mid-level setting is best for consistency, while higher levels allow more flexible phrasing.

Step 5 — Compliance Reminder

For sensitive domains such as healthcare or legal texts, always include a clear disclaimer. All outputs in these areas must go through a human review process before being applied in practice.

Step 6 — Generate and Review

Click Generate to produce annotations. Each result follows the structure Quote + Note + Warning. Use Copy to move results into your work or Download to save them.

Step 7 — Report Bug & Get Support

If something looks wrong, use the Report Bug button. A real support team monitors reports and responds quickly to resolve issues. Include your input text, selected mode, and a brief description of the problem.

⚠️ Important: All AI-generated annotations are drafts only. They must be reviewed, verified, and refined by a human before use in any professional or decision-making context. Do not rely on them as final or authoritative outputs.
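
The Quote + Note + Warning structure from Step 6 lends itself to simple post-processing once results are copied or downloaded. The sketch below assumes a JSON export shaped as a list of objects with `quote`, `note`, and `warning` keys. The page does not document the actual schema, so these field names, the `Annotation` class, and both helper functions are hypothetical.

```python
import json
from dataclasses import dataclass


@dataclass
class Annotation:
    """One annotation draft: Quote + Note + Warning (field names are assumed)."""
    quote: str
    note: str
    warning: str = ""


def parse_annotations(raw_json: str) -> list[Annotation]:
    """Parse a JSON array of annotation objects, skipping entries that lack a quote or note."""
    annotations = []
    for item in json.loads(raw_json):
        quote = item.get("quote", "").strip()
        note = item.get("note", "").strip()
        if not quote or not note:
            continue  # incomplete draft: nothing useful to review
        annotations.append(Annotation(quote, note, item.get("warning", "").strip()))
    return annotations


def to_bullets(annotations: list[Annotation]) -> str:
    """Render annotations as plain-text bullets for pasting into notes."""
    lines = []
    for a in annotations:
        lines.append(f"- Quote: {a.quote}")
        lines.append(f"  Note: {a.note}")
        if a.warning:
            lines.append(f"  Warning: {a.warning}")
    return "\n".join(lines)
```

Calling `to_bullets(parse_annotations(raw))` on an exported string gives a text version that can be reviewed line by line. If the real export uses different keys, only the three `item.get(...)` calls need to change.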

Tips for Better Annotations

- Be Specific with Input: Provide focused text with enough context; avoid unrelated content that confuses results.

- Pick the Right Mode: Match your task with one annotation mode; single-mode output is clearer and easier to refine.

- Keep Prompts Concise: Short, well-structured input produces sharper notes than long, unorganized text.

- Use Mid-Level Creativity: Balanced creativity often delivers the most accurate and adaptable annotations.

- Review and Adjust: Treat AI output as a starting point; edit and refine until it fully matches your intent.

User Case Study: From Input to Final Annotation

Case Example — Research Notes

Background Pain Point: Researchers often spend hours highlighting findings across multiple papers. Ensuring consistency and accuracy in evidence notes is time-consuming and prone to errors.

Input: A short passage from an academic article.

Chosen Mode: Evidence & Citations

AI Output:

- Quote: “According to Smith (2020)…”

- Note: This provides evidence that climate change is linked to glacier melting.

- Warning: ⚠️ Needs human review before citation use.

User Review: The researcher verifies the original paper, adjusts the note for clarity, and ensures citation formatting matches the rest of the review.

Final Application: The refined note is added to a literature review, keeping references consistent and making it easier to synthesize findings.

Reminder: This example is a simplified demonstration inspired by real workflows. AI assists with drafts, but human review and refinement are always required before final use.
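
The review step in this case study can be made explicit in a script that refuses to export unreviewed drafts. This is a minimal sketch and not part of the tool: `DraftNote`, `approve`, and `export_reviewed` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DraftNote:
    quote: str
    note: str
    reviewed: bool = False  # set only after a human has checked the source


def approve(draft: DraftNote, revised_note: Optional[str] = None) -> DraftNote:
    """Return a reviewed copy, optionally with the note rewritten by the reviewer."""
    return DraftNote(draft.quote, revised_note or draft.note, reviewed=True)


def export_reviewed(drafts: list[DraftNote]) -> list[str]:
    """Export notes as 'quote | note' strings, raising if any draft is unreviewed."""
    pending = [d for d in drafts if not d.reviewed]
    if pending:
        raise ValueError(f"{len(pending)} draft(s) still need human review")
    return [f"{d.quote} | {d.note}" for d in drafts]
```

Because `approve` returns a new object instead of mutating the draft, the original AI output stays available for comparison with the reviewer's version.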

Styles System Overview

- Key Points 📌 — Summarize main ideas in concise notes.

- Inline Explanations 📝 — Clarify text line by line in plain words.

- Definitions & Glossary 📚 — Define terms and build a quick glossary.

- Evidence & Citations 🔗 — Attach references and highlight supporting details.

- Compliance Notes 🛡️ — Flag obligations, rules, or important conditions.

- Risk & Issues ⚠️ — Highlight potential risks, edge cases, and concerns.

- Action Items ✅ — Extract tasks, responsibilities, and deadlines.

- Code Comments 💻 — Explain functions, logic, and parameters in code.

- API Docstrings 🧾 — Generate structured notes for parameters, returns, and usage.

- Data Labeling (ML) 🏷️ — Tag entities, intents, or categories for datasets.

- SEO Metadata 🔍 — Create titles, descriptions, and structured hints.

- Aspect & Sentiment 🗂️ — Tag aspects and sentiment in reviews or feedback.

In total, these 12 styles (the annotation modes you select in Step 2) cover a wide range of annotation needs, giving users flexible and structured options for different tasks.
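
Teams that record which style produced a given annotation (for example in review logs or bug reports) may find it useful to keep the 12 names in one place. The sketch below is only an illustration: the labels are copied from the list above, while the `AnnotationMode` enum and `mode_from_label` helper are hypothetical and not an API of the tool.

```python
from enum import Enum


class AnnotationMode(Enum):
    """The 12 styles listed on this page, keyed by an assumed identifier."""
    KEY_POINTS = "Key Points"
    INLINE_EXPLANATIONS = "Inline Explanations"
    DEFINITIONS_GLOSSARY = "Definitions & Glossary"
    EVIDENCE_CITATIONS = "Evidence & Citations"
    COMPLIANCE_NOTES = "Compliance Notes"
    RISK_ISSUES = "Risk & Issues"
    ACTION_ITEMS = "Action Items"
    CODE_COMMENTS = "Code Comments"
    API_DOCSTRINGS = "API Docstrings"
    DATA_LABELING = "Data Labeling (ML)"
    SEO_METADATA = "SEO Metadata"
    ASPECT_SENTIMENT = "Aspect & Sentiment"


def mode_from_label(label: str) -> AnnotationMode:
    """Look up a mode by its display label, ignoring case and surrounding spaces."""
    wanted = label.strip().lower()
    for mode in AnnotationMode:
        if mode.value.lower() == wanted:
            return mode
    raise ValueError(f"Unknown annotation mode: {label!r}")
```

Storing `AnnotationMode.CODE_COMMENTS.value` alongside each saved annotation keeps the mode name spelled the same way across a team's notes.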

Limitations & Solutions

| Limitation | What You May Notice | Solution |
|---|---|---|
| Context Gaps | Output may miss details if input text is too short or unclear. | Provide richer context or split text into smaller sections. |
| Over-General Notes | Some annotations may feel too broad or repetitive. | Choose a more precise mode or lower creativity level. |
| Language Nuances | In some languages, phrasing may sound unnatural. | Edit wording manually; refine translations as needed. |
| Technical Accuracy | Formulas, definitions, or references may need corrections. | Always verify technical content with trusted sources. |
| Human Review Required | Drafts are not final; risk of small errors or omissions. | Treat outputs as starting points; review before real use. |
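
The "split text into smaller sections" fix from the table can be done by hand or with a few lines of script before pasting. The sketch below groups paragraphs into sections of roughly `max_chars` characters. The function name and the default of 1500 are assumptions for illustration; the page does not state any input length limit.

```python
def split_into_sections(text: str, max_chars: int = 1500) -> list[str]:
    """Group paragraphs into sections of about max_chars characters.

    Paragraphs are never split, so a single paragraph longer than
    max_chars becomes its own oversized section.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    sections: list[str] = []
    current = ""
    for para in paragraphs:
        candidate = f"{current}\n\n{para}" if current else para
        if current and len(candidate) > max_chars:
            sections.append(current)  # close the section before it overflows
            current = para
        else:
            current = candidate
    if current:
        sections.append(current)
    return sections
```

Each returned section can then be annotated separately with the same mode, which keeps related paragraphs together while giving the tool a focused input.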

FAQs

Can I rely on the tool for final annotations?

No. All annotations are drafts. They should be reviewed, verified, and edited before real use. The tool provides structured starting points, not final outputs.

Does the tool work for different domains?

Yes. You can choose from 12 annotation modes and apply them across research, writing, code documentation, policy notes, and more. The system adapts style, but accuracy still depends on your input and review.

How many languages are supported?

The tool supports over 30 languages. Quality may vary, so it is best to refine phrasing manually if the output feels unnatural.

What if I need annotations for healthcare or legal texts?

⚠️ Outputs in these areas must be treated with caution. Always add a disclaimer and follow a strict human review process. The tool cannot replace expert judgment in regulated domains.

What if the generated notes are too general?

Try using a more specific annotation mode, lower the creativity setting, or provide more detailed input. Clearer context leads to sharper results.

Can I export or reuse the results?

Yes. You can copy results directly or download them for use in your workflow. Always check and refine before sharing or publishing.

How do I report an issue or unexpected output?

Use the Report Bug button. A real support team reviews each report and provides help. This feedback loop ensures continuous improvement.

Creator’s Note

The AI Annotation Generator was designed with a clear idea: AI should not replace human judgment, but support it.

Annotations are often about context, nuance, and responsibility — things that require human oversight. This tool helps by giving you a structured starting point, reducing the repetitive work, and making your review process faster and more consistent.

Every annotation it produces is a draft, meant to be checked, adapted, and improved.

The real value comes from the collaboration: AI handles the routine structure, and you provide the critical insight. In this way, the tool stays practical, transparent, and trustworthy.

2025-10-23

🧾 Try it out now — free, online, and ready when you are.

Streamline your reading — let AI help you generate clear, insightful annotations that make learning and analysis easier.